Dev’s Journal 7

Documenting Systems, Zero Knowledge Proofs, and Aztec Network/Connect

This past week I’ve been helping finish up audit remediations on a large project at OlympusDAO, and I’ve started researching a couple of new designs for upcoming projects. It’s a bit of a lull period for hardcore dev work. I’ve also been thinking more long-term about where to prioritize my efforts in the web3 ecosystem to drive towards the type of future that excites me. I spent a bit of time writing about that (coming soon) and working on my Bonds paper (coming sometime?). As a result, this Dev’s Journal will be a bit abridged. This week, I cover documenting and explaining systems to others, Zero Knowledge Proofs, and the Aztec Network ZK Rollup.

Documenting and Explaining Systems to Others

Good documentation has sections that explain the context, concepts, and architecture of the system, along with a technical reference for the individual contracts’ ABIs. The former is where the team has to translate its vision and design of the system into a consumable format. The latter can be handled by well-documented code (with NatSpec) and static documentation generators.

Writing good conceptual documentation that explains a system to both other technical practitioners and users is the hard part. Here are some suggestions to make it a little easier.

- Write good requirements documentation to begin with, and have a design before writing code (felt like I had to say it). Then, leverage the requirements documents originally created for the system and review them for changes made during implementation.

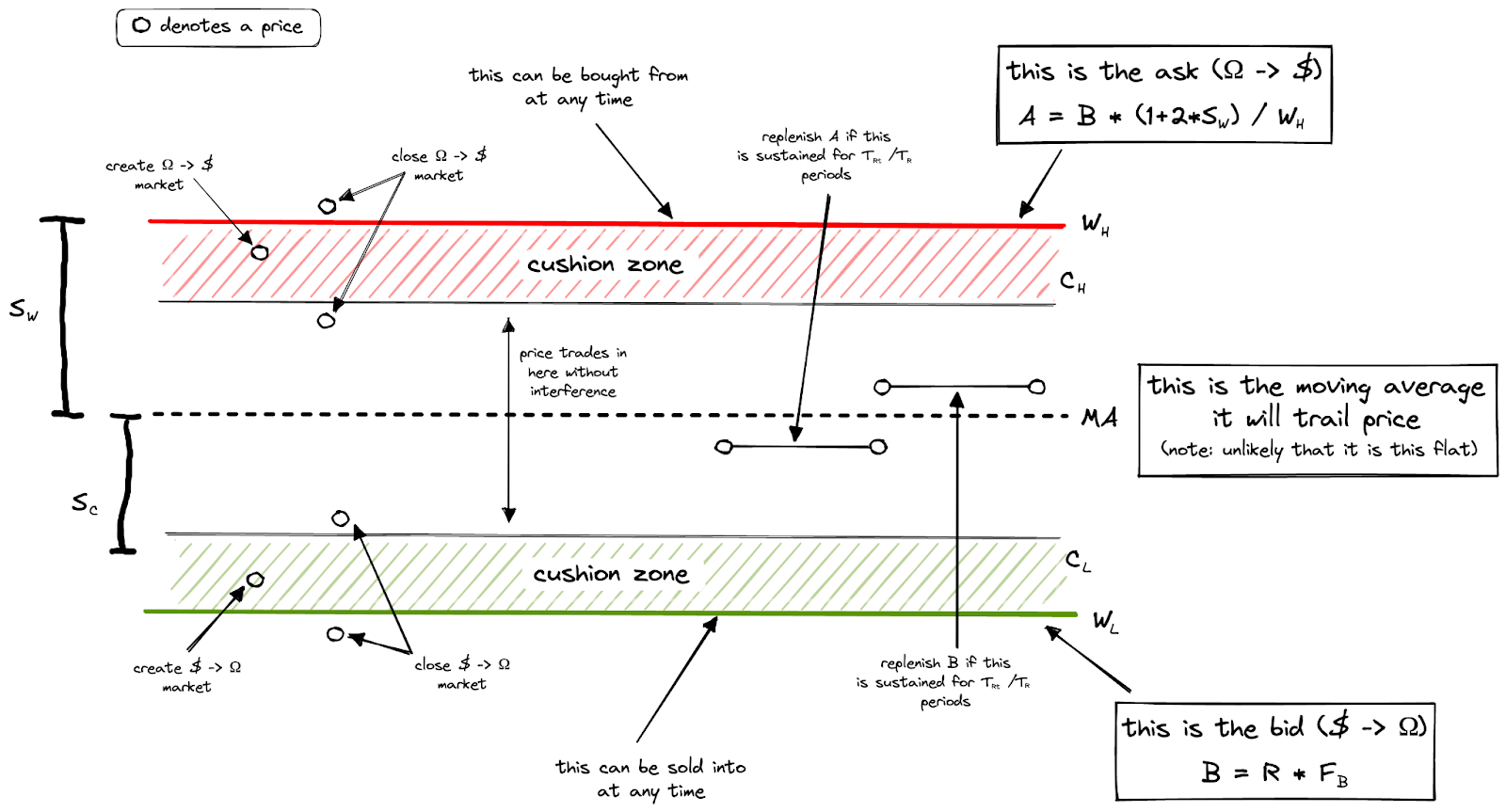

- Use graphs, visuals, or other non-verbal/non-technical aids to demonstrate what the system does. Here’s an example for the new OlympusDAO Range system as shown in Zeus’ Range-Bound Stability paper that defined the design for it:

- Provide context for the motivation behind the system and the problem it solves. Outsiders will care more about a system if they understand what problem it addresses. Additional color on design decisions can also help them understand the trade-offs you made.

On the technical side, there are tools that can help generate documentation. The Solidity compiler (solc) can extract NatSpec comments from well-documented code and output them as JSON, which various documentation hosting services can consume. The --devdoc flag outputs developer documentation (tags such as @dev, @param, and @return), and --userdoc outputs user-facing documentation (@notice tags):

```shell
solc --devdoc exampleContract.sol
solc --userdoc exampleContract.sol
```
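To illustrate what a documentation generator does with this JSON, here is a minimal Python sketch that merges userdoc and devdoc output into one Markdown page. The sample JSON is hand-written for illustration (the ExampleVault contract and its deposit(uint256) function are hypothetical) and is shaped like the JSON objects recent solc versions emit: a top-level methods map keyed by function signature, with notice entries in the userdoc and details/params entries in the devdoc. Real generators handle far more (events, errors, return values, inheritance).

```python
import json

# Hand-written, hypothetical sample shaped like solc's userdoc/devdoc JSON objects.
USERDOC = json.loads("""
{"kind": "user", "version": 1,
 "notice": "A minimal vault for depositing tokens.",
 "methods": {"deposit(uint256)": {"notice": "Deposit tokens into the vault."}}}
""")
DEVDOC = json.loads("""
{"kind": "dev", "version": 1, "title": "ExampleVault",
 "methods": {"deposit(uint256)": {"details": "Reverts if amount is zero.",
                                  "params": {"amount": "Number of tokens to deposit."}}}}
""")


def render_markdown(userdoc: dict, devdoc: dict) -> str:
    """Merge user-facing notices and developer details into one Markdown page."""
    lines = [f"# {devdoc.get('title', 'Contract')}"]
    if "notice" in userdoc:
        lines.append(userdoc["notice"])
    user_methods = userdoc.get("methods", {})
    dev_methods = devdoc.get("methods", {})
    # Document every function that appears in either JSON object.
    for sig in sorted(set(user_methods) | set(dev_methods)):
        user = user_methods.get(sig, {})
        dev = dev_methods.get(sig, {})
        lines.append(f"## `{sig}`")
        for text in (user.get("notice"), dev.get("details")):
            if text:
                lines.append(text)
        for name, desc in dev.get("params", {}).items():
            lines.append(f"- `{name}`: {desc}")
    return "\n\n".join(lines)


print(render_markdown(USERDOC, DEVDOC))
```

Putting the user notice before the developer details mirrors how most generated docs read: what the function does first, then how it behaves.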

There are multiple documentation generators that abstract this process and create a static site from your NatSpec documentation. These include OpenZeppelin’s solidity-docgen, the Hardhat docgen plugin by Nick Barry, and the Hardhat Dodoc plugin by PrimitiveFi.

Foundry doesn’t currently support documentation generation in forge, but there is a forge doc feature being actively developed. The intent is to combine best practices from existing Solidity doc generators and docs.rs.

Learning about Zero Knowledge Proofs (ZKPs)

I spent some time this week reading up on Zero Knowledge Proofs (ZKPs). Until now, I had only understood the concept at a high level, but I wanted to dive in a bit more. They come in different flavors depending on the implementation, but there are some basics that are more or less universal. This YouTube video and this article were good introductions.

A Zero Knowledge Proof (ZKP) is a way for one party (the Prover) to convince another party (the Verifier) that a statement is true without revealing any information beyond the fact that the statement is true.

The general motivation for a ZKP is that you have a secret you don't want to tell anyone, but you want to prove that you know it. This situation comes up in many cryptographic applications. A simple example from the video I referenced is Where's Waldo?: if you're looking at a Where's Waldo book with a friend and you find Waldo, you want to convince your friend that you know where he is without revealing his location.

A ZKP must satisfy three main properties:

- Completeness - If the statement is true and both the Prover and the Verifier follow the protocol honestly, the Verifier will be convinced.

- Soundness - If the Prover is dishonest and does not actually have the secret, the Prover will not be able to convince the Verifier of the false claim. Many implementations relax this requirement so that cheating succeeds only with negligible probability or would require an infeasible amount of computation, which still makes it extremely difficult to prove something false.

- Zero Knowledge - If the Prover is honest and does have the secret, the Verifier learns only that the statement is true, and not the secret (or anything else).
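To make the three properties concrete, here is a minimal Python sketch of the Schnorr identification protocol, a classic interactive proof that the Prover knows a secret x with y = G^x mod P. It is a swapped-in textbook example, not the construction from the video or article, and the parameters (P = 2039, Q = 1019, G = 4) are toy values chosen for readability that offer no real security.

```python
import random

# Toy parameters: far too small to be secure, chosen only for illustration.
P = 2039  # safe prime, P = 2*Q + 1
Q = 1019  # prime order of the subgroup used below
G = 4     # 4 = 2^2 is a quadratic residue mod P, so it generates the order-Q subgroup


def verify(y: int, t: int, c: int, s: int) -> bool:
    """Verifier's check: G^s == t * y^c (mod P)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


def honest_run(x: int, rng: random.Random) -> bool:
    """One round with a Prover who really knows x such that y = G^x mod P."""
    y = pow(G, x, P)
    r = rng.randrange(Q)         # Prover's fresh random nonce
    t = pow(G, r, P)             # 1. Prover sends commitment t
    c = rng.randrange(Q)         # 2. Verifier sends random challenge c
    s = (r + c * x) % Q          # 3. Prover responds; the uniform nonce r masks x
    return verify(y, t, c, s)


def cheating_run(y: int, rng: random.Random) -> bool:
    """A Prover WITHOUT x guesses the challenge in advance and forges t to match."""
    guess = rng.randrange(Q)
    s = rng.randrange(Q)
    t = (pow(G, s, P) * pow(y, (Q - guess) % Q, P)) % P  # t = G^s * y^(-guess)
    c = rng.randrange(Q)         # the real challenge is independent of the guess
    return verify(y, t, c, s)    # accepted only if c == guess


def simulate_transcript(y: int, rng: random.Random) -> tuple:
    """Build an accepting (t, c, s) transcript without knowing x (the simulator)."""
    c = rng.randrange(Q)
    s = rng.randrange(Q)
    t = (pow(G, s, P) * pow(y, (Q - c) % Q, P)) % P
    return t, c, s


if __name__ == "__main__":
    rng = random.Random(42)
    secret_x = 123
    public_y = pow(G, secret_x, P)
    print(all(honest_run(secret_x, rng) for _ in range(100)))  # True (completeness)
    passed = sum(cheating_run(public_y, rng) for _ in range(1000))
    print(passed, "of 1000 cheating runs passed")               # soundness: expect about 1000/Q
    print(verify(public_y, *simulate_transcript(public_y, rng)))  # True
```

honest_run shows completeness. cheating_run shows soundness: without x, the Prover passes only when it guesses the Verifier's random challenge, with probability 1/Q per round. simulate_transcript shows zero knowledge in the honest-verifier sense: accepting transcripts can be produced without x, so seeing one teaches the Verifier nothing about x.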

ZKPs are promising because their potential applicability is very broad. In computer science, computational complexity theory classifies problems by how hard they are to solve. Problems are grouped into classes based on how the time (number of computational steps) and memory space needed to solve them grow with the size of the input. Two common classes are “P”, the problems that can be solved in polynomial time by a deterministic algorithm, and “NP” (“non-deterministic polynomial time”), the problems whose proposed solutions can be verified in polynomial time. P is a subset of NP, because any problem that can be solved in polynomial time can also be checked in polynomial time (the verifier can simply ignore the proposed solution and solve the problem directly). It is unknown whether every NP problem can also be solved in polynomial time (the famous P vs. NP problem), but by definition, a solution to an NP problem can be verified in polynomial time. This is useful for a cryptographic system, since a verifier can check a solution potentially much faster than the prover could compute it.
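The gap between solving and verifying shows up clearly in subset sum, a classic NP-complete problem: given a list of integers, is there a subset that adds up to a target? In this illustrative Python sketch (not from the video), the verifier checks a proposed answer in linear time, while the solver is a brute-force search over up to 2^n subsets. No polynomial-time algorithm for subset sum is known, although smarter exponential and pseudo-polynomial algorithms exist.

```python
from itertools import combinations
from typing import List, Optional


def verify_subset_sum(nums: List[int], target: int, certificate: List[int]) -> bool:
    """Verifier: O(n) check that the certificate's distinct indices sum to target."""
    if len(set(certificate)) != len(certificate):
        return False  # each element may be used at most once
    if any(i < 0 or i >= len(nums) for i in certificate):
        return False
    return sum(nums[i] for i in certificate) == target


def solve_subset_sum(nums: List[int], target: int) -> Optional[List[int]]:
    """Solver: brute force over all 2^n index subsets, smallest subsets first."""
    for size in range(len(nums) + 1):
        for combo in combinations(range(len(nums)), size):
            if sum(nums[i] for i in combo) == target:
                return list(combo)
    return None


nums = [3, 34, 4, 12, 5, 2]
answer = solve_subset_sum(nums, 9)         # exponential search in general
print(answer)                              # [2, 4]  (4 + 5 = 9)
print(verify_subset_sum(nums, 9, answer))  # True, checked in linear time
```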

From the video, I learned that every PSPACE problem (problems solvable with a polynomial amount of memory, a class that includes P and NP) can be proven with an interactive proof. Additionally, assuming one-way functions exist, any statement that has an interactive proof also has a zero-knowledge proof. Together, these results mean that all PSPACE problems can be proven with ZKPs, so ZKPs can be used to prove the correctness of solutions for a very broad range of problems.
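The chain of results above can be summarized compactly. Here IP is the class of problems with interactive proofs and CZK is the class of problems with computational zero-knowledge proofs; IP = PSPACE is Shamir’s theorem, and the final inclusion holds only under the standard assumption that one-way functions exist.

$$
\mathsf{P} \subseteq \mathsf{NP} \subseteq \mathsf{PSPACE} = \mathsf{IP} \subseteq \mathsf{CZK}
$$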

Researching Aztec Network

The Aztec Network is a privacy-enabled ZK-rollup on Ethereum that uses the PLONK proving system. Aztec Connect was recently released and is a private bridge for using Ethereum DeFi apps via Aztec. It is essentially a VPN for Ethereum, and it lowers transaction costs for all users by batching their transactions together. Users interact directly with L1 liquidity sources, which removes the need for protocols to migrate (and fragment) their liquidity. Aztec Connect relies on 3 components:

- L1 DeFi Protocols (such as Curve, Element, Lido, Aave, etc.)

- Aztec Connect Bridge Contracts - interfaces that allow the Aztec rollup to communicate with L1 DeFi Protocols

- Aztec Connect SDK - front-end library that allows users to create and submit transactions to the Aztec rollup, roughly analogous to ethers.js for Ethereum.

Interestingly, this design has some nice properties that make it much easier for existing Ethereum protocols to support. Specifically:

- No additional audits or redeployments of core contracts for L1 protocols are required

- Integration only requires deploying a bridge contract, although these aren’t always as “simple” as described, especially for more complex use cases.

- There is no fragmentation of L1 liquidity or loss of composability

At a technical level, Aztec creates privacy for transactions by flipping Ethereum’s ownership model around. Instead of an address owning assets, assets are put into buckets called “notes”, and each note points to its owner. This is similar to how Polygon Nightfall works (which I reviewed previously), except that Aztec allows notes to be destroyed and new combinations of notes to be created with the same total balance, which allows more flexibility in the amounts that can be transferred.
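Here is a heavily simplified Python sketch of the note model described above: no address stores a balance; each note records its owner and value, and a transfer destroys input notes and creates new ones with the same total value. Everything here is a plaintext illustration with hypothetical names (Note, NoteSet, join_split). A real private rollup hides owners and values behind cryptographic commitments and uses a ZKP to prove that these rules were followed, none of which is modeled here.

```python
import secrets
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Note:
    owner: str  # the note points to its owner, rather than an account holding a balance
    value: int
    salt: str   # random nonce so two notes with the same owner and value stay distinct


class NoteSet:
    """Hypothetical plaintext ledger of unspent notes."""

    def __init__(self) -> None:
        self.live: Set[Note] = set()

    def mint(self, owner: str, value: int) -> Note:
        note = Note(owner, value, secrets.token_hex(8))
        self.live.add(note)
        return note

    def join_split(self, spender: str, inputs: List[Note],
                   outputs: List[Tuple[str, int]]) -> List[Note]:
        """Destroy the input notes and create new notes holding the same total value."""
        if len(set(inputs)) != len(inputs):
            raise ValueError("the same note cannot be spent twice in one transfer")
        if any(n not in self.live for n in inputs):
            raise ValueError("input note does not exist or was already spent")
        if any(n.owner != spender for n in inputs):
            raise ValueError("spender does not own every input note")
        if any(value <= 0 for _, value in outputs):
            raise ValueError("output values must be positive")
        if sum(n.value for n in inputs) != sum(value for _, value in outputs):
            raise ValueError("value is not conserved")
        for n in inputs:
            self.live.remove(n)
        return [self.mint(owner, value) for owner, value in outputs]

    def balance(self, owner: str) -> int:
        return sum(n.value for n in self.live if n.owner == owner)


ledger = NoteSet()
a1 = ledger.mint("alice", 10)
a2 = ledger.mint("alice", 5)
# Alice sends 12 to Bob by merging her two notes and splitting them into 12 (Bob) + 3 (change).
ledger.join_split("alice", [a1, a2], [("bob", 12), ("alice", 3)])
print(ledger.balance("alice"), ledger.balance("bob"))  # 3 12
```

Merging and re-splitting notes is what lets a transfer use any amount, rather than only the exact denominations of the notes someone happens to hold.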

I bridged a little ETH over to Aztec Network to test it out using the new zk.money app. The current uses for funds on the network are swapping ETH for stETH on Curve and depositing DAI into Element Finance for a fixed-rate return. You can also privately send funds to another address. Transaction costs are reduced by batching transactions from multiple users and submitting them together to L1 as a single set of calldata. As a result, the longer you’re willing to wait for your transaction to process, the cheaper it will generally be. One limitation of this structure is that use cases that require acting in real time to take advantage of a market opportunity, such as Olympus Bonds, would not be as efficient.
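The batching effect can be approximated with simple arithmetic. Suppose every batch pays some fixed L1 cost once (for example, posting the batch and verifying its proof) plus a small per-transaction calldata cost; then each user's share of the fixed cost shrinks as the batch grows. The gas figures in this Python sketch are hypothetical placeholders, not measured Aztec costs.

```python
def per_user_gas(batch_size: int, fixed_gas: int, per_tx_gas: int) -> float:
    """Amortized L1 gas per user when batch_size transactions share one rollup submission."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return fixed_gas / batch_size + per_tx_gas


# Hypothetical numbers: 500,000 gas paid once per batch, 5,000 gas of calldata per transaction.
FIXED_GAS = 500_000
PER_TX_GAS = 5_000

for size in (1, 10, 100):
    print(size, per_user_gas(size, FIXED_GAS, PER_TX_GAS))
# 1 505000.0
# 10 55000.0
# 100 10000.0
```

Waiting for a fuller batch pushes each user's cost toward the per-transaction floor, which is why patience pays off and why time-sensitive actions fit this model poorly.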

As with other ZK rollups, funds moving into and out of the rollup from mainnet are not shielded. There are currently caps on the amount of funds you can swap at once on the network, which are designed to protect transaction privacy. A single large transaction leaks information about who sent it, even if it is private, because only a small number of addresses will have bridged a large amount into the rollup.
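The privacy argument for the caps can be sketched with a simplified model in which the only public information is how much each address bridged into the rollup. The candidate senders of a private transfer are the addresses that deposited at least that amount, so a large transfer can shrink the candidate set to a single address. The addresses and amounts are hypothetical, and the model ignores other leaks such as timing.

```python
from typing import Dict, Set


def candidate_senders(deposits: Dict[str, float], amount: float) -> Set[str]:
    """Addresses whose total bridged deposits could cover a private transfer of `amount`."""
    return {addr for addr, total in deposits.items() if total >= amount}


# Hypothetical public deposit history (ETH bridged into the rollup per address).
deposits = {"0xA1": 0.5, "0xB2": 1.0, "0xC3": 1.2, "0xD4": 0.8, "0xE5": 250.0}

print(len(candidate_senders(deposits, 0.5)))  # 5: a small transfer blends in
print(candidate_senders(deposits, 100.0))     # {'0xE5'}: a large one points at a single address
```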

Currently Reading

Cryptonomicon - Neal Stephenson. This is quite a long audiobook, but it’s been a lot of fun. I hope to finish it next week. I also don’t speed books up when I read or listen for leisure, so it’s taken a while at a pace of ~1 hour a day.